
📝 Abel is named as a tribute to Niels Henrik Abel for his groundbreaking work in algebra and analysis, areas in which our model is also relatively strong. There is still a long way for us to go, though 🏃‍♂️🏃‍♀️🏁🏃‍♂️🏃‍♀️.

We show that:

- We have established a new state-of-the-art performance among open-source LLMs that do not use external tools, scoring 83.62 on GSM8K and 28.26 on MATH.
- GAIRMath-Abel secures 3 positions in the Top 10 rankings and is the only university-led project on the list (the others come from either high-profile startups or big tech companies).

We demonstrate that:

| Model Name | HF Checkpoint | GSM8K | MATH | License |
|---|---|---|---|---|
| GAIRMath-Abel-70B | 🤗 70B | 83.62 (+2.02) | 28.26 (+5.56) | Llama 2 |
| GAIRMath-Abel-13B | 🤗 13B | 66.41 (+2.51) | 17.34 (+3.34) | Llama 2 |
| GAIRMath-Abel-7B | 🤗 7B | 59.74 (+4.84) | 13.00 (+2.40) | Llama 2 |
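For the 70B row, the parenthesized gains can be reproduced from the ranking table below: they equal the difference from WizardMath-70B (GSM8K 81.6, MATH 22.7). The sketch below assumes that WizardMath-70B is the intended baseline; the baselines for the 13B and 7B rows are not stated here, so they are not checked. `gains` is a hypothetical helper for illustration.

```python
# Accuracy scores (%) copied from the tables in this README.
# Assumption: the "(+x)" gain for the 70B model is measured against
# WizardMath-70B, the next-best tool-free open-source model in the ranking.
ABEL_70B = {"GSM8K": 83.62, "MATH": 28.26}
WIZARDMATH_70B = {"GSM8K": 81.6, "MATH": 22.7}


def gains(model: dict[str, float], baseline: dict[str, float]) -> dict[str, float]:
    """Absolute improvement per benchmark in percentage points, rounded to 2 decimals."""
    return {bench: round(model[bench] - baseline[bench], 2) for bench in model}


print(gains(ABEL_70B, WIZARDMATH_70B))  # {'GSM8K': 2.02, 'MATH': 5.56}
```

Rounding is needed because plain float subtraction leaves tiny representation errors (for example, 83.62 - 81.6 is not exactly 2.02).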

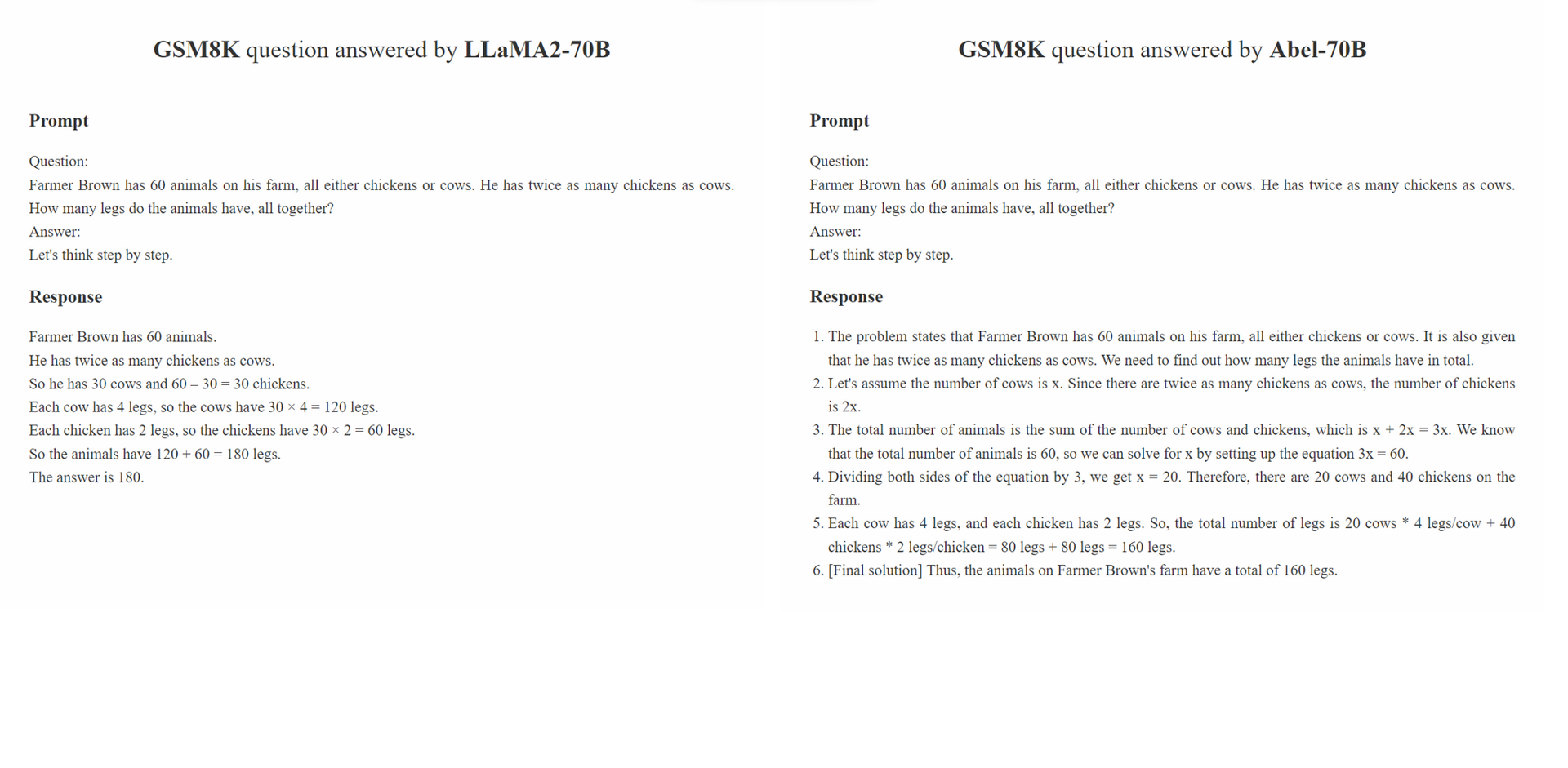

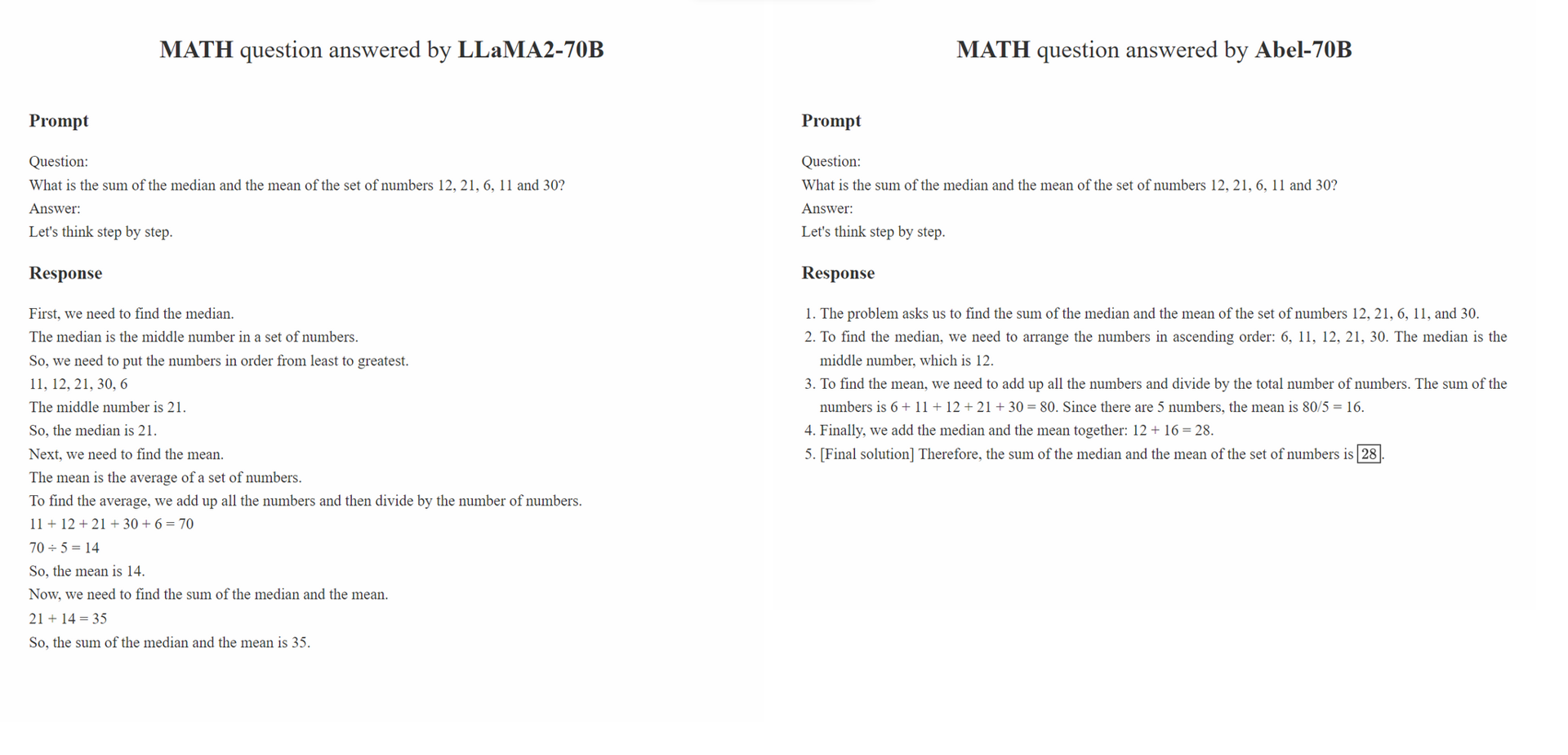

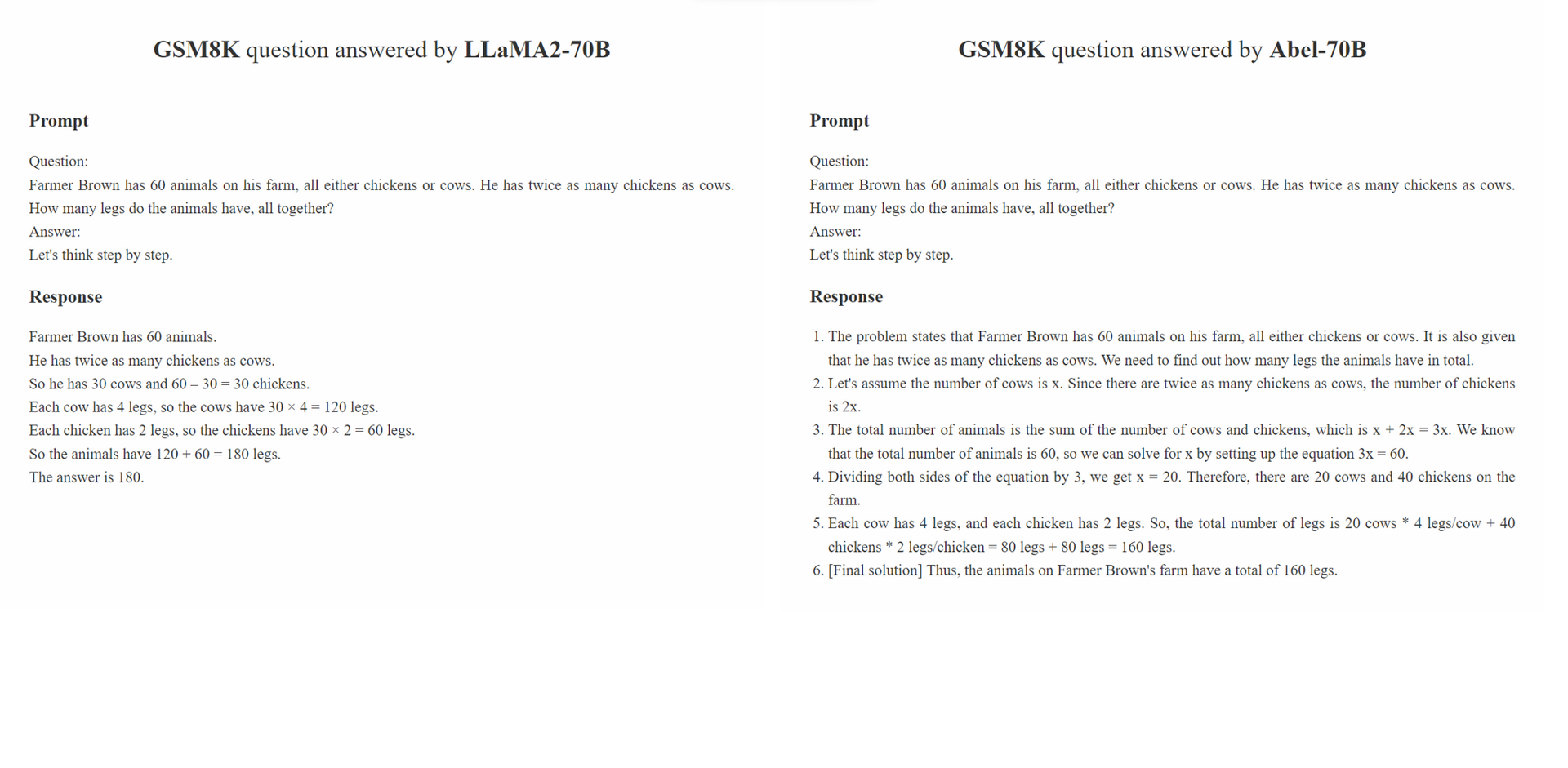

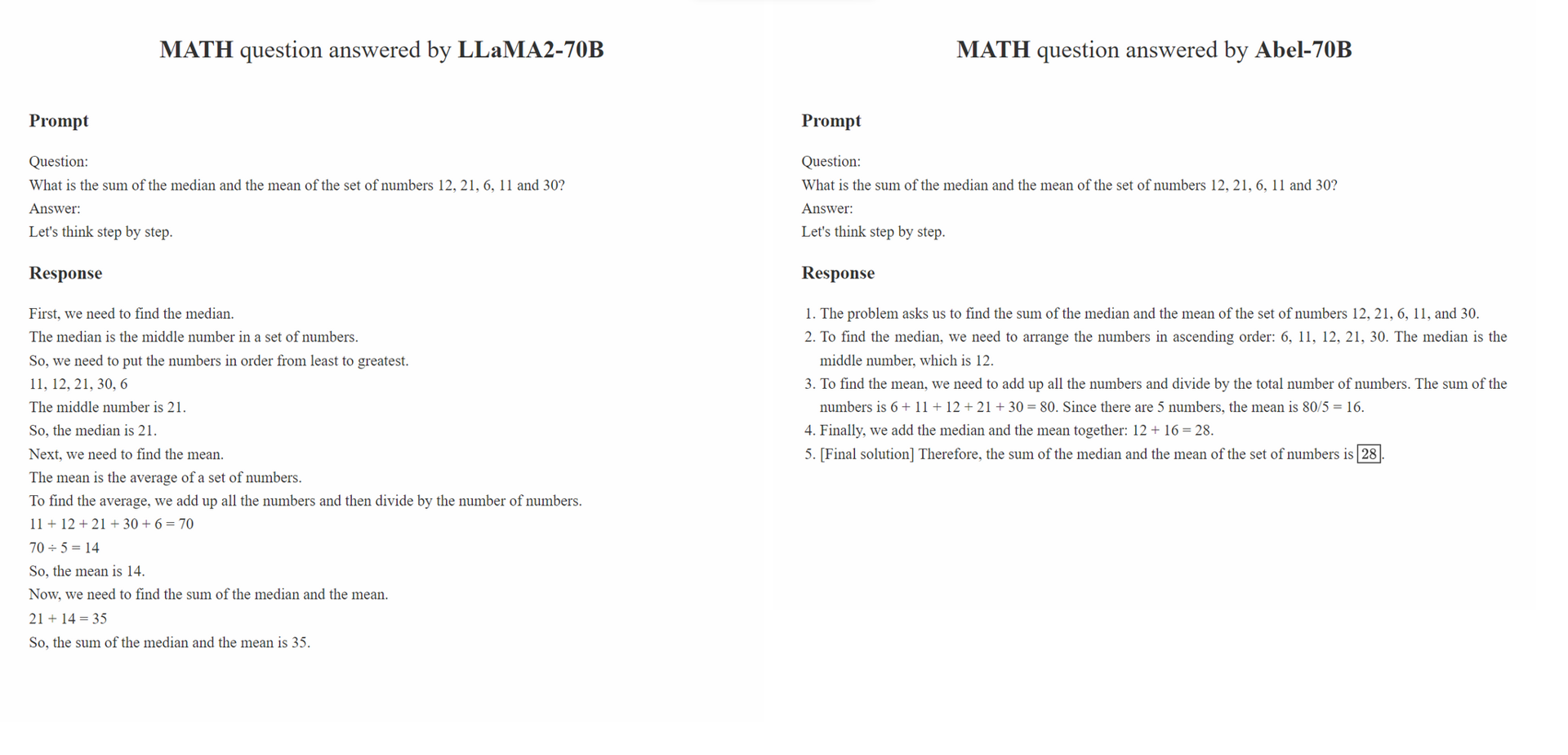

We propose Parental Oversight, a babysitting strategy for supervised fine-tuning.

Parental Oversight is not limited to any specific data processing method. Instead, it defines the data processing philosophy that should guide supervised fine-tuning in the era of Generative AI (GAI). We believe that, in the era of GAI, data structure engineering has emerged as a new paradigm. Within this paradigm, the way the fine-tuning data is processed significantly affects the performance of the trained model. We expect a growing number of studies in the community to focus on this data processing philosophy.

The principle of Parental Oversight emphasizes treating supervised fine-tuning with care and prudence. This is analogous to the way parents are encouraged to educate their children. Different types of data, along with their presentation formats (e.g., step-by-step reasoning, iterative refinement), can be likened to varied educational methods. Just as parents cautiously select the most effective approach to instruct their children, GAI practitioners should cautiously select the most effective data processing approaches to better instruct their LLMs.

Furthermore, the philosophy of "the more data, the better" does not always hold true. The quality and relevance of annotated samples can often outweigh their quantity. Training samples used in SFT should not just present the right answer, but also teach the model how the correct answer is derived, building on the knowledge the LLM already has. Additionally, if the LLM's knowledge is insufficient to answer a question, Parental Oversight should step in promptly to fill the knowledge gap.
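The point that SFT targets should show how an answer is derived, rather than only state it, can be made concrete with a small data-formatting sketch. It assumes GSM8K-style records, where each solution lists worked steps (with calculator annotations such as `<<12*2=24>>`) and ends with a `#### <answer>` line. The prompt template and the names `to_sft_example` and `SFTExample` are hypothetical and do not describe the actual Abel training pipeline.

```python
import re
from dataclasses import dataclass

# Hypothetical prompt template for illustration; not the template used to train Abel.
PROMPT_TEMPLATE = "Question:\n{question}\nAnswer:\nLet's think step by step.\n"

# GSM8K-style solutions embed calculator annotations such as <<12*2=24>>.
CALC_ANNOTATION = re.compile(r"<<[^>]*>>")


@dataclass
class SFTExample:
    prompt: str
    target: str


def to_sft_example(question: str, solution: str, keep_rationale: bool = True) -> SFTExample:
    """Turn a GSM8K-style record into a (prompt, target) pair for SFT.

    The solution must end with a line "#### <final answer>". With
    keep_rationale=True the target keeps the worked steps (calculator
    annotations removed); otherwise it contains only the final answer.
    """
    rationale, sep, final = solution.rpartition("####")
    if not sep:
        raise ValueError("solution has no '#### <answer>' line")
    final = final.strip()
    steps = CALC_ANNOTATION.sub("", rationale).strip()
    if keep_rationale:
        target = f"{steps}\nThe answer is {final}."
    else:
        target = f"The answer is {final}."
    return SFTExample(prompt=PROMPT_TEMPLATE.format(question=question), target=target)


example = to_sft_example(
    "A shop sells 12 apples in the morning and twice as many in the afternoon. "
    "How many apples does it sell in total?",
    "In the afternoon it sells 12*2 = <<12*2=24>>24 apples.\n"
    "In total it sells 12+24 = <<12+24=36>>36 apples.\n"
    "#### 36",
)
print(example.target)
```

Here `example.target` holds both reasoning steps followed by `The answer is 36.`; passing `keep_rationale=False` instead yields the answer-only target that the paragraph above argues against.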

- 🔒 denotes a proprietary model and 🌍 an open-source model.
- 🎓 indicates that model development is led by a university (rather than a company).
- We only consider models that do not use any tools (e.g., Python).

| Ranking | Model | Params | Leading Organization | GSM8K | MATH |
|---|---|---|---|---|---|
| 🔒 1 | GPT-4 | unknown | OpenAI | 92.0 | 42.5 |
| 🔒 2 | Claude-2 | unknown | Anthropic | 88.0 | - |
| 🔒 3 | PaLM-2-Flan | unknown | Google | 84.7 | 33.2 |
| 🌍 4 | GAIRMath-Abel | 70B | 🎓 GAIR Lab at Shanghai Jiao Tong University | 83.6 | 28.3 |
| 🌍 5 | WizardMath | 70B | Microsoft | 81.6 | 22.7 |
| 🔒 6 | Claude-Instant | unknown | Anthropic | 80.9 | - |
| 🔒 7 | ChatGPT | unknown | OpenAI | 80.8 | 34.1 |
| 🔒 8 | ChatGPT-0301 | unknown | OpenAI | 74.9 | - |
| 🌍 9 | GAIRMath-Abel | 13B | 🎓 GAIR Lab at Shanghai Jiao Tong University | 66.4 | 17.3 |
| 🌍 10 | GAIRMath-Abel | 7B | 🎓 GAIR Lab at Shanghai Jiao Tong University | 59.7 | 13.0 |
| 🔒 11 | Minerva | 540B | Google | 58.8 | 33.6 |
| 🔒 12 | PaLM | 540B | Google | 56.9 | 8.8 |
| 🌍 13 | Llama-2 | 70B | Meta | 56.8 | 13.5 |
| 🌍 14 | RFT | 33B | OFA | 56.5 | 7.4 |
| 🌍 15 | Baichuan2-13B | 13B | Baichuan | 52.8 | 10.1 |
| 🔒 16 | Minerva | 62B | Google | 52.4 | 27.6 |
| 🔒 17 | PaLM | 62B | Google | 52.4 | 4.4 |
| 🌍 18 | RFT | 13B | OFA | 52.1 | 5.1 |
| 🌍 19 | LLaMA | 65B | Meta | 50.9 | 10.6 |
| 🌍 20 | Qwen | 7B | Alibaba | 44.9 | 8.5 |
| 🔒 21 | Chinchilla | 70B | DeepMind | 43.7 | - |
| 🔒 22 | Llama-2 | 34B | Meta | 42.2 | 6.24 |
| 🌍 23 | Galactica | 30B | Meta | 41.7 | 12.7 |
| 🌍 24 | ChatGLM2 | 12B | Zhipu | 40.9 | - |
| 🔒 25 | text-davinci-002 | 175B | OpenAI | 40.7 | 19.1 |
| 🌍 26 | LLaMA | 33B | Meta | 35.6 | 7.1 |
| 🔒 27 | GPT-3 | 175B | OpenAI | 34.0 | 5.2 |
| 🌍 28 | InternLM | 7B | Shanghai AI Lab | 31.2 | - |
| 🌍 29 | Llama-2 | 13B | Meta | 28.7 | 3.9 |
| 🌍 30 | Vicuna v1.3 | 13B | LMSYS | 27.6 | - |
| 🌍 31 | Falcon | 40B | Technology Innovation Institute | 19.6 | 2.5 |
| 🌍 32 | LLaMA | 13B | Meta | 17.8 | 3.9 |
| 🌍 33 | MPT | 30B | MosaicML | 15.2 | 3.1 |
| 🌍 34 | Galactica | 6.7B | Meta | 10.2 | 2.2 |
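The ranking claims made earlier (GAIRMath-Abel holds three of the Top 10 positions and is the only university-led entry among them) can be checked mechanically against the table. This is a small illustrative sketch: `Entry` and `is_ranked_by_gsm8k` are hypothetical helpers, the rows are transcribed by hand from the top of the table with organization names shortened, and the ordering key is assumed to be GSM8K accuracy because the table is sorted that way.

```python
from typing import NamedTuple


class Entry(NamedTuple):
    rank: int
    model: str
    params: str
    org: str        # shortened organization name
    academic: bool  # True where the table shows 🎓
    gsm8k: float


# Top-10 rows transcribed from the ranking table (MATH scores and 🔒/🌍 flags omitted).
TOP10 = [
    Entry(1, "GPT-4", "unknown", "OpenAI", False, 92.0),
    Entry(2, "Claude-2", "unknown", "Anthropic", False, 88.0),
    Entry(3, "PaLM-2-Flan", "unknown", "Google", False, 84.7),
    Entry(4, "GAIRMath-Abel", "70B", "GAIR Lab", True, 83.6),
    Entry(5, "WizardMath", "70B", "Microsoft", False, 81.6),
    Entry(6, "Claude-Instant", "unknown", "Anthropic", False, 80.9),
    Entry(7, "ChatGPT", "unknown", "OpenAI", False, 80.8),
    Entry(8, "ChatGPT-0301", "unknown", "OpenAI", False, 74.9),
    Entry(9, "GAIRMath-Abel", "13B", "GAIR Lab", True, 66.4),
    Entry(10, "GAIRMath-Abel", "7B", "GAIR Lab", True, 59.7),
]


def is_ranked_by_gsm8k(rows: list[Entry]) -> bool:
    """True if ranks are 1..n and GSM8K scores never increase down the list."""
    consecutive = [r.rank for r in rows] == list(range(1, len(rows) + 1))
    ordered = all(a.gsm8k >= b.gsm8k for a, b in zip(rows, rows[1:]))
    return consecutive and ordered


abel_ranks = [r.rank for r in TOP10 if r.model == "GAIRMath-Abel"]
academic_orgs = {r.org for r in TOP10 if r.academic}

print(is_ranked_by_gsm8k(TOP10))  # True
print(abel_ranks)                 # [4, 9, 10]
print(academic_orgs)              # {'GAIR Lab'}
```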

@misc{abel,
  author = {Chern, Ethan and Zou, Haoyang and Li, Xuefeng and Hu, Jiewen and Feng, Kehua and Li, Junlong and Liu, Pengfei},
  title = {Generative AI for Math: Abel},
  year = {2023},
  publisher = {GitHub},
  journal = {GitHub repository},
  howpublished = {\url{https://github.com/GAIR-NLP/abel}},
}