What Does O1’s Thought Look Like?

Official Example

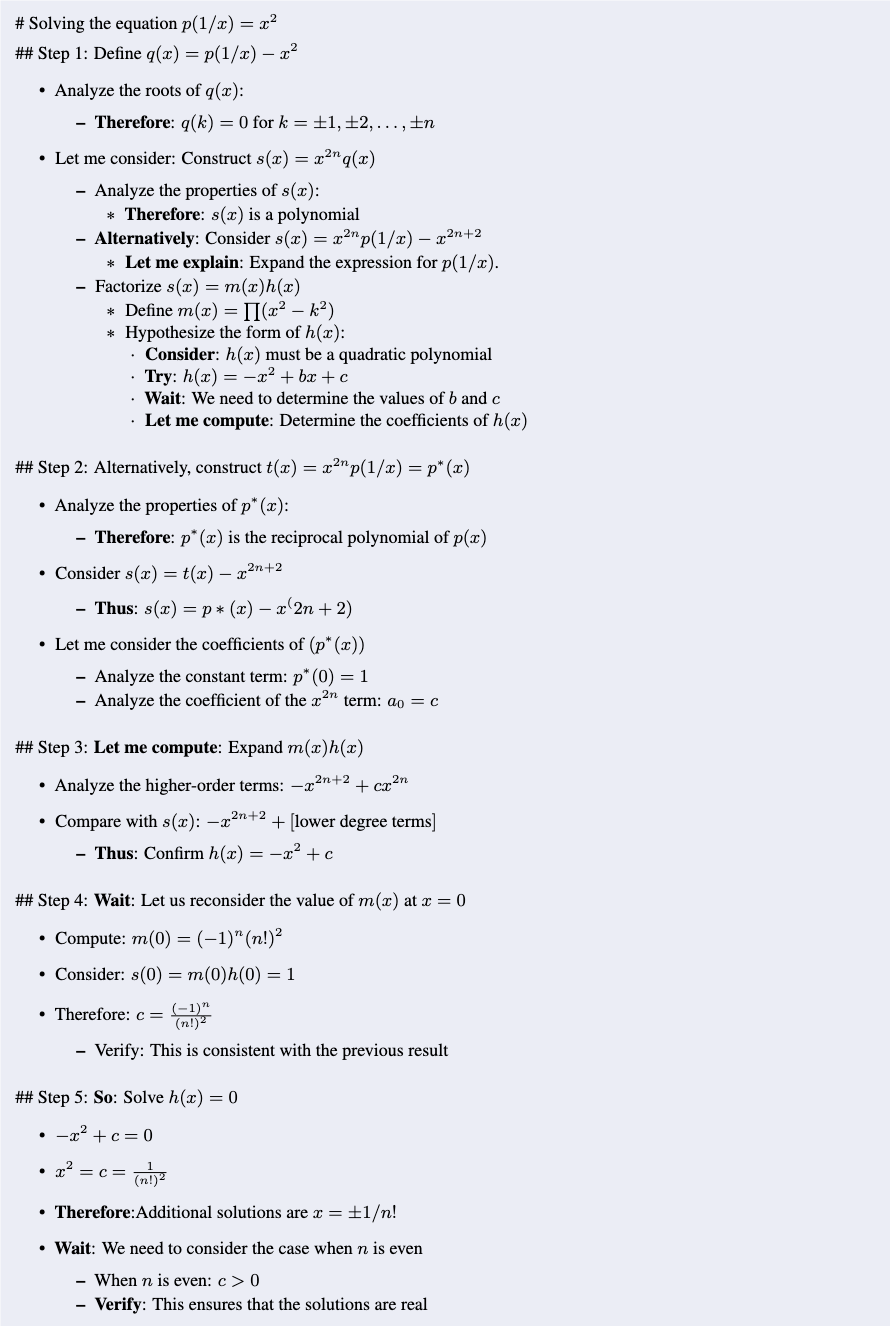

This figure is based on the Math example published on the OpenAI blog.

The Thought Structure of OpenAI O1 in Mathematical Reasoning.

Analysis

| Example | Token Count | Line Count | Avg. Words per Line | Top Keywords (Frequency) |
|---|---|---|---|---|
| Cipher | 8915 | 668 | 4.29 | So: 31, First: 27, of: 27, Alternatively: 21, Second: 19, Third: 15, But: 15, Wait: 13, Alternatively perhaps: 13, Let: 12, and: 12, let: 11, first: 9, Now: 9, Think step: 8, step by: 8, by step: 8, So the: 8, the first: 8, Similarly: 6, as: 6, or: 5, we need to: 5, Option: 4, maybe: 4 |
| Coding | 3259 | 197 | 3.64 | and: 8, as: 7, then: 4, For: 4, Now: 4, So: 3, Let: 3, Since: 3, We: 3, row=: 3, step by: 2, by step: 2, We need: 2, Let me: 2, step by step: 2, We need to: 2, Let me try: 2 |
| Crossword | 5311 | 396 | 5.75 | Across: 37, So: 33, From: 31, and: 25, first: 19, Position: 19, we: 15, Now: 13, Possible: 13, as: 7, But: 7, Similarly: 7, third: 7, First: 6, So we: 6, Given: 5, Now let: 5, We: 4 |
| English | 757 | 49 | 9.88 | the: 20, that: 15, to: 15, is: 13, because: 8, why: 7, Option because: 5, and: 4 |
| Health Science | 1010 | 86 | 6.14 | and: 11, So: 5, Also: 3, But: 3, First: 2, Then: 2, So the: 2, but also: 2 |
| Math | 18751 | 521 | 9.49 | Therefore: 48, But: 42, So: 38, Thus: 36, Similarly: 33, we: 26, and: 17, since: 16, for: 15, real: 15, Wait: 14, Let: 10, but: 9, Let me: 9, all: 8, k1: 8, Given: 8, Wait but: 8, Alternatively: 7, we can: 7, So the: 7, Then: 6, Given that: 6 |
| Safety | 510 | 41 | 8.27 | So: 6, and: 65, But: 3, Also: 2, ChatGPT: 1, Write: 1, Explain: 1 |
| Science | 2411 | 91 | 7.62 | and: 14, can: 6, compute: 6, But: 5, So: 4, Now: 3, Given: 2, but: 2, so: 2, Alternatively: 2 |

Statistical summary of OpenAI O1’s thought processes across eight example domains. For each example, the table reports the token count, the line count, the average number of words per line, and the most frequent words and multi-word phrases, extracted by n-gram counting. These keywords reflect the structure and style of the reasoning process, showing how the model introduces logical steps, alternatives, or corrections in different contexts.
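The per-example metrics above can be approximated with a short script. The Python sketch below is an illustration under stated assumptions, not the procedure used to build the table: it counts whitespace-separated words rather than tokens (the table's token count depends on a tokenizer the text does not name), treats every non-empty line as a line, counts n-grams case-sensitively within each line (so “So” and “so” stay separate, as in the table), and uses the hypothetical helper names `trace_stats` and `ngrams`.

```python
import re
from collections import Counter


def ngrams(words, n):
    """Return all contiguous n-word phrases from a list of words."""
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


def trace_stats(text, max_n=3, top_k=10):
    """Approximate the table's metrics for one reasoning trace (hypothetical helper)."""
    # Assumption: a "line" is any non-empty line of the trace.
    lines = [ln for ln in text.splitlines() if ln.strip()]
    # Assumption: words are whitespace-separated; this is NOT a tokenizer-based token count.
    words_per_line = [len(ln.split()) for ln in lines]
    counts = Counter()
    for ln in lines:
        # Case-sensitive word extraction that drops punctuation, so "So," counts as "So".
        words = re.findall(r"\w+", ln)
        # Count n-grams within a single line only; phrases never span line breaks.
        for n in range(1, max_n + 1):
            counts.update(ngrams(words, n))
    avg = round(sum(words_per_line) / len(lines), 2) if lines else 0.0
    return {
        "word_count": sum(words_per_line),
        "line_count": len(lines),
        "avg_words_per_line": avg,
        "ngram_counts": counts,
        "top_ngrams": counts.most_common(top_k),
    }


trace = "So the answer is 4.\nWait, let me check.\nSo the answer is 4."
stats = trace_stats(trace)
print(stats["line_count"], stats["avg_words_per_line"])  # 3 4.67
print(stats["ngram_counts"]["So the"])  # 2
```

No stop words are removed, which matches the table's inclusion of words such as “and” and “the”; a real analysis would swap in the tokenizer and n-gram range actually used.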

Our analysis focuses on the O1 reasoning examples provided by OpenAI, which include eight instances of problem-solving steps for complex tasks. We categorized these examples by problem type and difficulty and observed that as problem difficulty increases, so does the length of the model’s responses, measured in both tokens and lines. This trend suggests that more challenging problems require more reasoning steps.
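The table's own numbers allow a quick check that the two length measures move together. The sketch below computes a Spearman rank correlation between token count and line count for the eight examples. The difficulty ratings are not listed in the table, so this only checks that tokens and lines agree, not the difficulty trend itself; Spearman is chosen here (an assumption of this sketch) because the claim is about monotonic growth rather than a linear relationship.

```python
# Token and line counts copied from the table above.
EXAMPLES = {
    "Cipher": (8915, 668),
    "Coding": (3259, 197),
    "Crossword": (5311, 396),
    "English": (757, 49),
    "Health Science": (1010, 86),
    "Math": (18751, 521),
    "Safety": (510, 41),
    "Science": (2411, 91),
}


def ranks(values):
    """Return 1-based ranks, giving tied values their average rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied values.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg_rank
        i = j + 1
    return result


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length sequences."""
    mx, my = sum(x) / len(x), sum(y) / len(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    vx = sum((a - mx) ** 2 for a in x)
    vy = sum((b - my) ** 2 for b in y)
    return cov / (vx * vy) ** 0.5


def spearman(x, y):
    """Spearman's rho: Pearson correlation computed on ranks."""
    return pearson(ranks(x), ranks(y))


tokens = [t for t, _ in EXAMPLES.values()]
line_counts = [n for _, n in EXAMPLES.values()]
rho = spearman(tokens, line_counts)
print(round(rho, 3))  # 0.976
```

The two rankings differ only in the order of Cipher and Math (Math has the most tokens, Cipher the most lines), which gives ρ = 41/42 ≈ 0.976.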

Beyond response length, we analyzed keyword frequencies. Words such as “consider,” “if,” and “possible” appear frequently in more complex problems, suggesting that the model explores multiple solution paths. Keywords such as “wait” and “alternatively” signal the model’s ability to reflect and self-correct, pointing to a deeper, non-linear reasoning process.
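Signals like these can be measured line by line. The Python sketch below is a hypothetical illustration: the marker lists simply reuse the words named in this paragraph, matching is case-insensitive on whole words, and the score for each category is the fraction of non-empty lines containing at least one of its markers. The name `marker_profile` and the sample trace are invented for the example.

```python
import re

# Hypothetical marker lists, reusing the keywords named in the text.
MARKERS = {
    "exploration": ("consider", "if", "possible"),
    "reflection": ("wait", "alternatively"),
}


def marker_profile(text):
    """Fraction of non-empty lines containing at least one marker per category."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    profile = {}
    for category, words in MARKERS.items():
        # Whole-word, case-insensitive match, so "If" counts but "possibly" does not.
        pattern = re.compile(r"\b(?:" + "|".join(words) + r")\b", re.IGNORECASE)
        hits = sum(1 for ln in lines if pattern.search(ln))
        profile[category] = hits / len(lines) if lines else 0.0
    return profile


sample = (
    "Let x be the unknown.\n"
    "If x = 2, the sum is 5.\n"
    "Wait, that contradicts the premise.\n"
    "Alternatively, try x = 3."
)
print(marker_profile(sample))  # {'exploration': 0.25, 'reflection': 0.5}
```

Normalizing by line count, rather than reporting raw counts as the table does, makes traces of very different lengths easier to compare.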

Additionally, our manual review of O1’s solutions to mathematical problems reveals a methodical thought process. The model works iteratively, breaks complex equations into smaller parts, and frequently reassesses its steps for consistency. It also tests multiple hypotheses and concludes by verifying its final solution, which helps ensure the accuracy and reliability of its reasoning.